TL;DR

Testing GenAI applications goes beyond functional checks—it’s about validating data quality, model behavior, bias control, and performance under real-world scenarios.

In this blog, you’ll learn:

- The biggest challenges—data complexity, bias, and security risks like prompt injection.

- Proven testing methods like metamorphic, failure injection, and benchmark testing.

- How test automation ensures scalability, reliability, and continuous validation.

Want to make your GenAI apps reliable and secure?

As per Bloomberg intelligence, Generative AI applications industry is growing at a CAGR of 42% in the next 10 years. There’s a huge incoming demand for GenAI products and applications and they are expected to add about 280 billion of new software revenues in business. The numbers show the potential Generative AI holds and how it can drive new category creation for software products and services.

As we see spike in adoption of GenAI applications, there’s an increased debate about the large data sets, accuracy and performance, bias handling, and integration with other technology stack. This is where GenAI testing comes into picture. Just like any other software applications, Generative AI applications need to be tested thoroughly before releasing in the market.

Keep reading if your organizations plan to build or incorporate GenAI applications to enhance efficiency and productivity or have already implemented them.

Challenges of Testing Generative AI Applications

Testing GenAI applications come with unique challenges like complexity and variety of data, model testing and performance, removing bias, and unpredictability of various AI functionalities like natural language processing, computer vision, and machine learning. Overcoming these challenges require a comprehensive and innovative testing strategy tailored to the type of GenAI application and business use cases.

- Data Complexity and Diversity: Generative AI applications are built using large sets of data to generate output in form of text, audio, video, and more. These intricate algorithms involve advanced mathematical and statistical techniques making it difficult for teams to continuously test the underlying logic, evolving business use case and expected behavior of the system or application.

The quality of data directly influences the performance and behavior of GenAI applications.

- Biasness and Fairness: Inherent human biases like demographics, cultural, socioeconomic biases can influence accuracy of the GenAI models and applications. It’s also important to assess all these biases and see how the output may vary and adjust the data cleaning and preparation accordingly.

- Cybersecurity concerns for GenAI: While GenAI models have been adopted by all types of business functions and roles, they have unique security concerns. The two recent types of cybersecurity techniques to target GenAI models are Jailbreaking and Prompt Injection. In Jailbreaking, special prompts are created to manipulate AI model to generate wrong or misleading outputs.

In Prompt injection, attackers inject malicious or manipulated data to trick the model output. By doing so, these attackers use model capabilities to produce output that deviate significantly from the actual expected outputs.

- Performance of Gen AI applications: Generative AI applications ingest large amounts of data, learn from them, and then are expected to produce the desired output. For this to happen, it is highly important to think about performance and computational capacities of GenAI applications.

GenAI applications must be engineered to handle volumes of data, processing them, and produce the desired output. These applications must go through load, stress, volume, and variety testing to ensure they can handle the huge amounts of data without any downtime or errors.

Need help testing your GenAI application?

5 Key Testing Strategies for Generative AI Applications

Software testing strategies need to keep evolving as the new applications and technologies are making their ways in our day to day lives. Here are some of the testing strategies that work well with testing Generative AI applications:

- Data Validation Testing – Generative AI applications are as good as the data fed into them; hence data validation testing becomes extremely important. Data Validation is a type of software testing strategy that involves checking data quality, accuracy, consistency, and integrity of data.

By using ETL (Extract, Transform, and Load) and Data Integration tools, data has been extracted from one source and then fed into AI-ML models. This whole process can be automated using data test automation tools. Enhops has partnered with QuerySurge to cater to the rising data testing requirements of our clients.

- Model Testing – As easy as it sounds, Model Testing involves testing how well the in-built algorithms are working to give the right It involves testing of data, model performance testing, security and ethical testing, privacy considerations, and continuously testing these parameters to improve GenAI outputs over period.

- Failure Injections – Failure Injection testing is not so new technique and has been used by various tech giants including Netflix, Spotify and more. This testing practice involved injecting failures or wrong prompts (in case of GenAI applications) to detect how these applications are responding to these failures.

Some of the widely used failure injecting tools are Xception, beStorm, Holodeck, Grid-FIT, Orchestra, ExhaustiF, and The Mu Service Analyzer. Failure injection testing helps in understanding and assessing behavior of the GenAI applications. It also helps in identifying any system vulnerabilities and improving fault tolerant mechanism.

- Metamorphic Testing – Metamorphic testing is a new testing approach as compared to others. In a plain language, metamorphic testing involves providing morphed but similar types of inputs to assess the output of the GenAI applications.

GenAI applications work on the prompts provided by the end-users and hence it is highly important to validate their outputs based on the inputs provided.

- Benchmarking Testing – Benchmark testing refers to validating Generative AI applications against various GenAI tools available and comparing them with the best in the market. It helps in understanding how their AI models are working when compared to some others in the market, check for any anomalies, identify areas of risks and improvements, and establish state-of-the-art performance standards. These benchmarks often involve standardized datasets and evaluation metrics to assess the quality, diversity, and novelty of generated outputs.

How Test Automation can help in GenAI Application Testing

Test Automation ensures consistent high-quality output via rigorous testing of various functional and non-functional aspects of GenAI applications. GenAI applications are trained on variety and volume of data sets and test automation allows to scale up these testing efforts across various datasets and scenarios.

Test automation also ensures consistency throughout the testing ensuring that Generative AI applications are thoroughly covered across various browsers and scenarios. Automated regression, unit, and functional test make sure that new features don’t break existing ones and run as expected. Additional continuous testing in Continuous Integration and Continuous Deployment pipelines ensure Generative AI applications are thoroughly tested throughout the development lifecycle.

Optimize GenAI Testing with Human Touch

Generative AI applications are becoming more integrated part of our daily lives. To keep up with the customer expectations and increase in usage, it is essential for teams to test them thoroughly. As GenAI applications will mature, the testing strategies will mature too but human touch will be needed throughout the testing phases. Right from understanding the data quality and integrity to removing conscious and unconscious bias, human touch will amplify the testing of GenAI applications. Additionally, this will require more exploratory and user-focused ways of testing to remove out any biases and make sure AI models are working or improving as expected.

Meet Our AI Experts

Lakshman KavetiManaging Director, Data, AI &

App Dev, ProArch

Viswanath PulaAVP – Data & AI,

ProArch

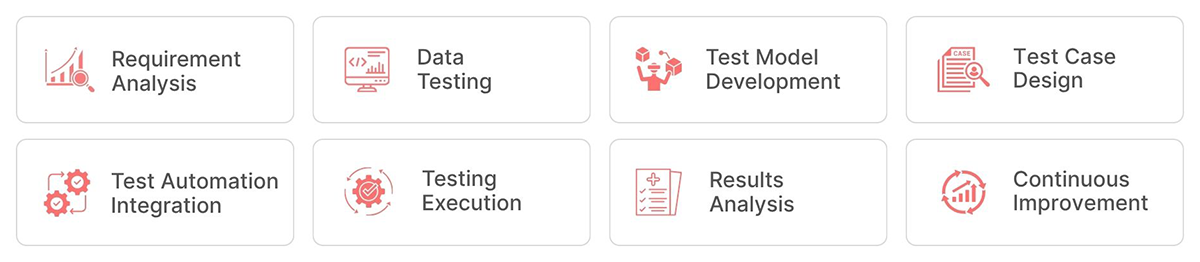

Enhops’ Approach towards Testing Generative AI Application

Enhops has been at the forefront of quality assurance and software testing. We have helped multiple clients test their hardware and software integrations, chatbots, virtual assistant applications, PLC devices, and more. This shows that our testing capabilities expand across multiple domains, industries, and technologies. With the advent of GenAI applications, we are prepared much in advance to test these applications using combination of human-exploratory and low-code, no-code test automation platforms. Our flagship offering Testing Center of Excellence is baked with processes, tools, and experts to cater to GenAI testing requirements.

For more details, get in touch with us at marketing@enhops.com